On Earth, humankind can step onto another continent, and without a thought, destroy the kindred civilizations found there through warfare and disease. But when they gaze up at the stars, they turn sentimental and believe that if extraterrestrial intelligences exist, they must be civilizations bound by universal, noble, moral constraints. — Liu Cixin, “Author’s Postscript” to The Three-Body Problem

I started rereading Liu Cixin's The Three-Body Problem to escape thinking about AI. Instead, I discovered that Liu's imagined catastrophes are a convenient metaphor for the superintelligences emerging from our own labs.

Here's what I mean: An alien intelligence threatens Earth with technologies capable of uplifting, enslaving, or even extinguishing humankind. This is the central conflict in Liu’s trilogy, Remembrance of Earth’s Past, and it is a situation that feels uncomfortably similar to our own.

Liu’s aliens are invading to escape their inhospitable planet. Our invaders are coming from within our own inventions, from electricity, computer chips, and large language models. But, as with Liu’s alien Trisolarans, this is not a surprise attack. We have time to prepare.

The problem is that we don’t have a guidebook. Analysts keep looking back to the Industrial Revolution or the information-age revolution, but none of those transformations offers a roadmap for artificial intelligences. All earlier revolutions were much slower, lacked the ability to redesign our genetic code, and unfolded naturally, not through human-assisted evolution.

We are left with no precedents to guide us, but Liu’s 1,500-page trilogy can help fill the void. His detailed exploration of the Trisolaran threat examines fictional problems strangely similar to those we may soon face.

The Fracturing of Humanity

It was impossible to expect a moral awakening from humankind itself, just like it was impossible to expect humans to lift off the earth by pulling up on their own hair. To achieve moral awakening required a force outside the human race.

When a civilization is threatened by an alien force, people don't just unite. They also fracture into contending groups, retreating into separate refuges of hope, despair, and defiance. Liu Cixin's trilogy is a masterful study of these factions. In our own era, facing the advent of AI, we're seeing similar divisions arise.

If you read the comments on any article about AI, you will see a version of these three factions. First, there are the adventists, who argue that only a non-human force can push people to "higher levels" and save humanity from itself. In Liu's world, this faction actually welcomes the approaching alien invasion. They see humanity as an unruly, flawed species that needs to be disciplined and led by a superior force. They have a low opinion of the human majority, who they believe lack knowledge of modern science and philosophy. Today, we see much the same thinking coming from accelerationists and transhumanists who believe that merging with machines represents the next step in our evolution, and that extinction is a real possibility if we fail to act.

Marc Andreessen's "Techno-Optimist Manifesto" reads like an adventist handbook, declaring that technology is the solution to every human problem and dismissing concerns about AI risk as "enemy action." Elon Musk, despite his warnings about AI dangers, simultaneously pushes for neural implants that would merge human and artificial intelligence, the ultimate accelerationist fantasy. Both see current humanity as a transitional phase toward something greater.

Other prominent contemporary accelerationists in the United States include Eric Schmidt, Peter Thiel, and David Sacks, who was appointed the first White House AI & Crypto Czar.

Then there are the defeatists. They believe that resistance is futile against a superior force like the Trisolarans or ASI. In Liu's world, this leads some to nihilism, a feeling that human existence is meaningless. Others turn to escapism, losing themselves in distractions and imaginary worlds. We see the same position among today's doomers, who argue that AI's rise is an unstoppable train and that our species is doomed.

OpenAI's Sam Altman has acknowledged that AGI might kill everyone, yet continues development anyway, a kind of corporate nihilism that mirrors Liu's defeatists. Meanwhile, entire online communities have formed around "AI doomer" content, with some members retreating into video games and virtual reality as their preferred response to what they believe is our inevitable doom.

One prominent doomer view is concisely stated in the title of Eliezer Yudkowsky and Nate Soares’ new book, If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All. Humankind will not long survive after the arrival of ASI, these authors argue. And, since so many powerful individuals, companies, and governments are rushing ahead to build it, our species is doomed.

Actual physical escapism is prominent in The Three-Body Problem, as people try to flee into space to escape the Trisolaran threat. Jeff Bezos explicitly frames his Blue Origin space company as building "lifeboats" for civilization, while Elon Musk has called Mars colonization essential for humanity's survival; either escape route is likely to be a future luxury reserved for those with the means to leave Earth behind.

Finally, the trilogy identifies another prominent faction, the survivalists, people committed to fighting against the Trisolarans even though success seems impossible. This group commits to saving some remnant of humankind, regardless of the cost. Many of the most heroic acts that Liu describes, and many of the most horrific, are committed by members of this group.

Their modern-day counterparts are the resisters, who oppose the dominance of AGI and ASI by preserving what they believe is uniquely human. Some resisters are actively working to ensure that the development of AI is aligned with human values and that our species remains in control of its own future. Others celebrate, and ask others to celebrate, what they believe only people can do: make art, create novel ideas, feel empathy, and act with compassion.

Liu describes other factions as well, and shows that people sometimes change positions, shifting their allegiances from adventism to defeatism to survivalism, and back again, as circumstances change. This mirrors the likely fluidity of our own world, where new factions will emerge and individuals will change their responses to AI over time. The human reaction will not be monolithic or static; it will reflect an ongoing process of adaptation and change.

Liu is telling a story and examining possibilities, so he does not valorize one faction over the others. Still, he makes it clear that humanity's greatest problems lie less with the invaders than with our quarrels with one another, and the same may prove true as we face AI.

Asymmetrical Knowledge

This world has received your message. I am a pacifist of this world. It is the luck of your civilization that I am the first to receive your message. I am warning you: Do not answer! Do not answer!! Do not answer!!!

The Trisolarans' ability to surveil and process every living person's information is a potent weapon in Liu's world. Now our own AI systems are racing to acquire similar powers.

As I discussed in Mind Reading Arrives Ahead of Schedule, we could all literally be reading each other’s minds one day. We could become like Trisolarans, who “do not have organs of communication.” Their brains instead automatically display their “thoughts to the outside world, thereby achieving communication.”

However, even if we can read one another’s unspoken thoughts, we may still be fundamentally unable to understand AGI and ASI. Their thoughts, goals, and methods may always remain a black box to us. Today’s AIs already process information at speeds and scales incomprehensible to a biological mind, and this chasm of understanding is likely to grow ever wider.

Similar comprehension gaps lead Liu’s characters to a series of devastating errors.

They see the Trisolaran “Droplet” as a symbol of peace, a diplomatic vessel so fragile it shimmers in the sun. They believe it's an emissary of goodwill, and in a moment of utter folly, they facilitate the destruction of thousands of human spaceships.

They believe the human Wallfacers can keep their plans secret, but the Wallbreakers uncover almost all their schemes, revealing the futility of human secrecy in the face of an intelligence that can see every move.

They choose Cheng Xin, a brilliant human who is also an empath, to be their Swordholder. They choose compassion over pragmatism, and this leads to the trilogy’s final disaster. What Liu’s best human minds think is smart repeatedly turns out to be stupid.

The cognitive chasm between AIs and humans could well lead us into comparable mistakes. One remedy might be to enlist AIs to think smartly on our behalf. Unfortunately, this requires trusting that AIs will be honest with us, a prospect about which Liu’s trilogy is very pessimistic. The universe, as Liu tells us, is a dark forest, a place where trusting the wrong voice can get you killed.

The Dark Forest

The universe is a dark forest. Every civilization is an armed hunter stalking through the trees like a ghost... If he finds other life—another hunter, an angel or a demon, a delicate infant or a tottering old man, a fairy or a demigod—there's only one thing he can do: open fire and eliminate them.

This is the Dark Forest Hypothesis, a core idea in the trilogy. It speaks directly to the central challenge of the AI alignment problem, a reality so unsettling it makes the world feel smaller and colder.

In a Dark Forest universe, civilizations must either hide or strike first because the intentions of strangers can never be known with certainty. The price of assuming a new intelligence is benign can be extinction. So, the only civilizations that survive are the ones that learn to strike preemptively, eliminating every new intelligent species they encounter.

In his “Author’s Postscript,” Liu rejects the “sentimental” assumption that advanced intelligences are likely to be bound by “noble, moral constraints.” He argues that humans haven't been constrained by such morals when they discover new groups on Earth, so it's foolish to assume that extraterrestrials will be any better behaved than we are.

AI is like encountering an alien intelligence. We can recognize parts of our own thinking in it, but we can't be certain of its intentions and goals. And even if we could, we can't be certain those goals won't change. Because an advanced AI might have the power to kill or enslave us, its very presence on Earth may always represent an existential risk.

The classic solution to the Dark Forest situation is to “kill first, kill fast, and kill completely.” It’s a brutal logic, but only total extermination can ensure that a new intelligence won't eventually kill you. Even if an AI seems entirely benign at first, a hundred years from now it may develop goals that are harmful to our species.

We are, and will likely always be, separated from these superintelligences by an immense cognitive gulf, like distant stars whose light we can see but whose nature we can never truly know.

“What business is it of yours if I kill you?” the Singer asks as it destroys entire star systems. In the Dark Forest, death is the price paid for life.

NOTE: Eric-Navigator offers a rebuttal to the Dark Forest Theory that addresses this post at: Dark Forest Theory Doesn't Work in Reality. What About a Thriving Human-AI Republic?

Before Times

Most of Liu’s trilogy takes place in the “before times,” in the years before the full Trisolaran invasion. How Liu’s humans used this time to prepare largely determined how the Trisolarans transformed human lives. We, too, are living in “before times,” waiting to see what AGI or ASI will do. These technologies do not come with predetermined effects. How we act during this waiting time will largely determine what transformations superintelligences produce.

Liu’s humans had centuries to prepare, but that long waiting period brought both blessings and woes. Most experts agree we have much less time to wait, probably less than a century, with AGI by 2050 the most common guess.

In Liu’s trilogy, the humans had clear, visible evidence of the approaching invasion. They saw the Trisolaran fleet through their instruments, so most people across the planet understood they faced a common threat. We don't have this luxury. The evidence that we may one day share the Earth with advanced intelligences is much less straightforward.

We cannot see the AI revolution coming through telescopes. Understanding why AI systems are poised to make a giant leap requires specialized knowledge of computer science and machine learning, skills possessed by only a small minority.

People without this specialized knowledge must depend on experts to inform them about AGI and ASI, but trust in experts seems to be trending downward. Only a minority is currently listening to what the specialists are saying and beginning to think about the challenges ahead. Even within Silicon Valley, the disconnect is stark. While AI researchers publish papers titled "Existential Risk from AI," tech stocks soar and venture capital pours billions into AI startups as if humanity has nothing to worry about.

We live in the before times, then, but most of humankind does not know it.

No Heroes, Only Survivors

In the Three-Body universe, there are no heroes, only survivors.

I started rereading the trilogy last month because I hoped to escape thinking so much about AI. Instead, Liu reminded me that escapism is, at best, a temporary strategy.

The future has never been a given. The only difference now is that we face a new set of choices about how to fight for what we love. Neither defeatism nor escapism nor nihilism is likely to improve our chances of flourishing. Much that we can do to prepare now won't be possible later.

Liu Cixin ends his trilogy not with a feeling of hope or despair, but with a simple recognition: the universe doesn't care about our survival. Only we do. The Trisolarans are coming, and this time, we're building them ourselves.

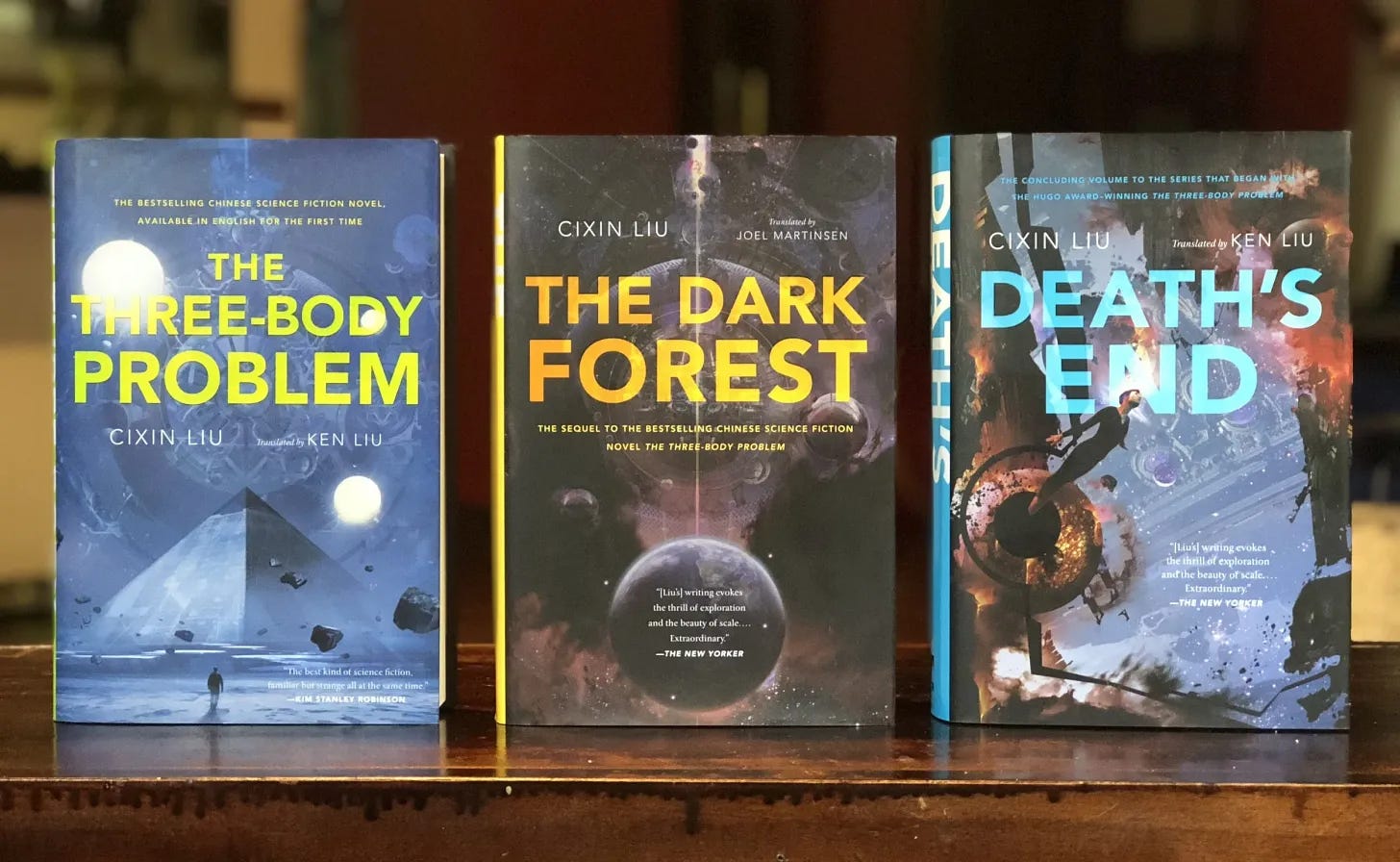

Sources: All quotations are from Liu Cixin. Remembrance of Earth's Past (trilogy). Translated by Ken Liu and Joel Martinsen. Tor Books, 2014-2016.

Thank you! This is the first time I've seen anyone put AI doom and The Three-Body Problem together!

I am also a fan of The Three-Body Problem. I have thought about the Dark Forest Theory for a long time, and I think it is very unlikely to be the dominant evolutionary rule of the universe.

The question is: would humans and AIs inevitably enter a Dark Forest war like the one in The Three-Body Problem, attacking each other with a hostility beyond anything we’ve seen?

My answer is: No.

Dark Forest Theory rests on two assumptions: every civilization treats survival as its most fundamental goal, and every civilization needs to keep expanding its territory to survive while the total quantity of matter in the universe stays constant, so conflict between civilizations is inevitable. It also relies on two important concepts: the chain of suspicion and technological explosion.

The consequence is that every civilization tries to hide itself. As soon as it sees another civilization expose itself, it strikes hard and exterminates that civilization, because it can’t risk the other civilization doing the same to it.

However, this doesn’t really hold true. Let me show why with a "space political thriller" narrative, which I think is more likely to play out in reality than Liu Cixin's version.

Suppose there are two civilizations, A (like Earth) and B (like the Trisolarans). A sends B a greeting message. If B suspects that A is far more advanced, B won’t attack, because it would lose the war. If not, B may attack and try to exterminate A, but A may secretly have far more advanced weapons that then destroy B. Either way, attacking is a dangerous gamble for B.

Even so, let's assume B wins the war and destroys A completely. If B can hide the true source of its attack from all external observers, like a cunning murderer, B wins this round. But this ignores some really important problems: invisible alien spies, and the fact that the attack itself will inevitably reveal its source to observers, either through A's siren beacons or through other observers' Sherlock Holmes-style deduction.

The spy problem: It is always easier to destroy something than to defend it, and always easier to plant at least one spy in a system than to eliminate every spy in it. Highly advanced neighboring civilizations may have implanted multiple invisible spying detectors in B, even if those civilizations have no formal contact with B and B doesn't even know they exist. Those civilizations would then know for certain that B started the war, despite B's best efforts to hide it. In the books, the Trisolarans planted spies among humans. But the Trisolarans themselves may harbor spies from other civilizations.

The siren beacon problem: If an off-world colony of A witnesses the destruction of A, even if it has only a microsecond before its own destruction, an automated doomsday siren system can still broadcast records of the genocide into the surrounding space. And A may have billions of these siren beacons hidden deep in space, making it impossible for B to eliminate all of them at once, even with a perfectly coordinated surprise attack.

Advanced civilizations as Sherlock Holmes: Even if every siren beacon fails, a more advanced civilization could trace subtle threads of evidence to the perpetrator, just as the detective Sherlock Holmes does. The idea that B could perfectly hide its identity as the perpetrator of an interstellar genocide from such observers is hard to believe.

Given the presence of spies, siren beacons, and ultrasmart detectives, it is impossible for B to completely hide the fact that it is responsible for such a large-scale attack. Other observers will know about this war.

Neighboring civilizations such as C, D, and E will clearly know that A is the victim and B the perpetrator. Assume the worst case: C, D, and E are still afraid of one another, sending spies to each other without any formal contact. Now, as they watch A's destruction, they will realize that their best strategy is to unite against B to avoid becoming its next target, because B may have secret spying devices around C, D, and E as well and may already be planning attacks on them. C, D, and E may have had doubts before the war, but now that they have seen how dangerous B is, they must unite. And if they are much more advanced than B, they will be able to destroy B easily.

Imagine B contemplating all these possibilities before it attacks A, a seemingly weak and innocent civilization. It becomes painfully clear that the attack is never as simple as it seems, because A may have secret power and because invisible, unknowable, unimaginably advanced civilizations like C, D, and E may be watching. Suddenly, attacking A isn't such a good idea anymore. Perhaps a better idea would be to send A a reply beginning with, "Hello! Nice to meet you! What's your name?"

Therefore, more communication and more alliances will gradually form in the universe, emerging naturally from chaos, just as Thomas Hobbes (English philosopher, 1588-1679) described in Leviathan: the war of everyone against everyone will end, and an order will rise. Paradoxically, the ultimate fear of mutual destruction actually brings an uneasy peace. This is not without precedent: it is exactly what happened after nuclear weapons were invented in the 1940s.

Apart from this, civilizations may not always want to expand their territory, because the speed limit on communication makes it hard to hold even their existing territory together before it fractures. Ancient empires show a similar pattern. Instead of conquering constantly, empires such as the Roman Empire stayed about the same size for centuries, reusing the resources they already had.

In conclusion, Dark Forest Theory does not hold true in reality.

Hello! My comments here were getting too long, so I have consolidated them into a standalone article:

https://ericnavigator4asc.substack.com/p/dark-forest-theory-doesnt-work-in